Should prey help predators by cooperating with them in the hunt? A Light Reading story on the Broadband World Forum conference highlights the view that operators want the telecom equipment vendors to “get more involved in open source projects instead of sitting on the sidelines.” Sorry, but that sure sounds to me like proposing a cheetah ask a gazelle to slow down a bit. Operators need to accept the basic truth that open-source tools and other transformation projects that cut operator costs are also cutting vendor revenue.

The problem here, according to operators who’ve opened up to me, is that the operators don’t know what else to do. They’ve proved, in countless initiatives over the last fifteen years, that they have no skill in driving open-source software, or even driving standards initiatives that are based on software rather than on traditional boxes. I’ve criticized the operators for their failure to contribute effectively to their own success, but maybe it’s time to recognize that they can’t help it.

Back in my early days as a software architect, I was working with a very vocal line department manager who believed that his organization had to take control of their destiny. The problem was that he had nobody in the organization who knew anything about automating his operation. His solution was to get a headhunter to line up some interviews, and he hired his “best candidate.” When he came to me happily to say “I just hired the best methods analyst out there,” I asked (innocently) “How did you recognize him?”

If you don’t have the skill, how can you recognize who does? That’s the basic dilemma of the operators. In technology terms, the world of network transformation is made up of two groups. The first, the router-heads, think of the world in terms of IP networks built from purpose-built appliances. The second, the cloud-heads, think of the world in terms of virtualized elements and resource pools. Operators’ technologists fall into the first camp, and if you believe that software instances of network features are the way of the future, you need people from the second. Who recognizes them in an interview?

It’s worse than that. I’ve had a dozen cloud types contact me in 2020 after having left jobs at network operators, and their common complaint was that they have nowhere to go, no career path. There was no career path for them, because the operators tended to think of their own transformation roles as being transient at best. We need to get this new cloud stuff in, identify vendors or professional services firms who will support it, and then…well, you can always find another job with your skill set.

It’s not that operators don’t use IT, and in some cases even virtualization and the cloud. The problem is that IT skills in most operators are confined to the CIO, the executive lead in charge of OSS/BSS. That application is a world away, in technology terms, from what’s needed to virtualize network features and support transformation. In fact, almost three-quarters of operators tell me that their own organizational structure, which separates OSS/BSS, network operations, and technology testing and evaluation, is a “significant barrier” to transformation.

About a decade ago, operators seemed to suddenly realize they had a problem here, and they created executive-level committees that were supposed to align their various departments with common transformation goals. The concept died out because, according to the operators, they didn’t have the right people to staff the committees.

How about professional services, then? Why would operators not be able to hire somebody to get them into the future without inordinate risk? Some operators are trying that now, and some have already tried it and abandoned the approach. Part of the problem comes back to those open-source projects. Professional services firms are reluctant to spend millions participating in open initiatives that benefit their competitors as much as themselves. Particularly when, if they’re successful, their efforts create products that operators can then adopt on their own.

Why can’t cloud types and box types collaborate? The come at the problem, at least in today’s market, from opposite directions. The box types, as representatives of the operators and buyers, want to describe what they need, which is logical. That description is set within their own frame of reference, so they draw “functional diagrams” that look like old-line monolithic applications. The cloud people, seeing these, convert them into “virtual-box” networks, and so all the innovation is lost.

Here’s a fundamental truth: In networking, it will never be cheaper to virtualize boxes and host the results, than it would be to simply use commodity boxes. The data plane of a network can’t roam around the cloud at will, it needs to be where the trunks terminate or the users connect. We wasted our time with NFV because it could never have succeeded as long as it was focused only on network functions as we know them. The problem is that the network people, the box people, aren’t comfortable with services that live above their familiar connection-level network.

This “mission rejection” may be the biggest problem in helping operators guide their own transformation. You can’t say “Get me from New York City to LA, but don’t cross state lines” and hope to find a route. Operators are asking for a cost-limiting strategy because they’re rejecting everything else, and that’s what puts them in conflict with vendors.

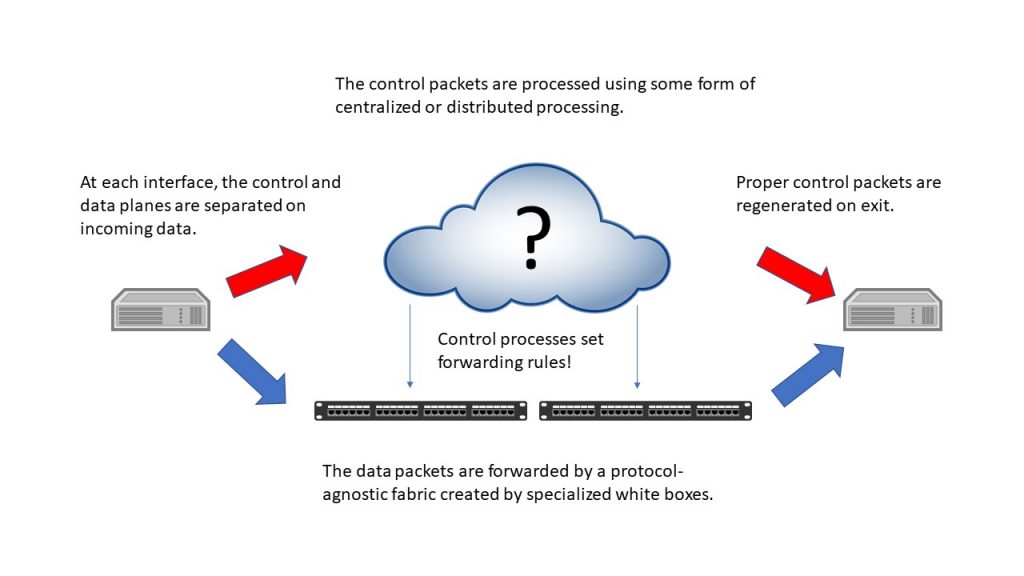

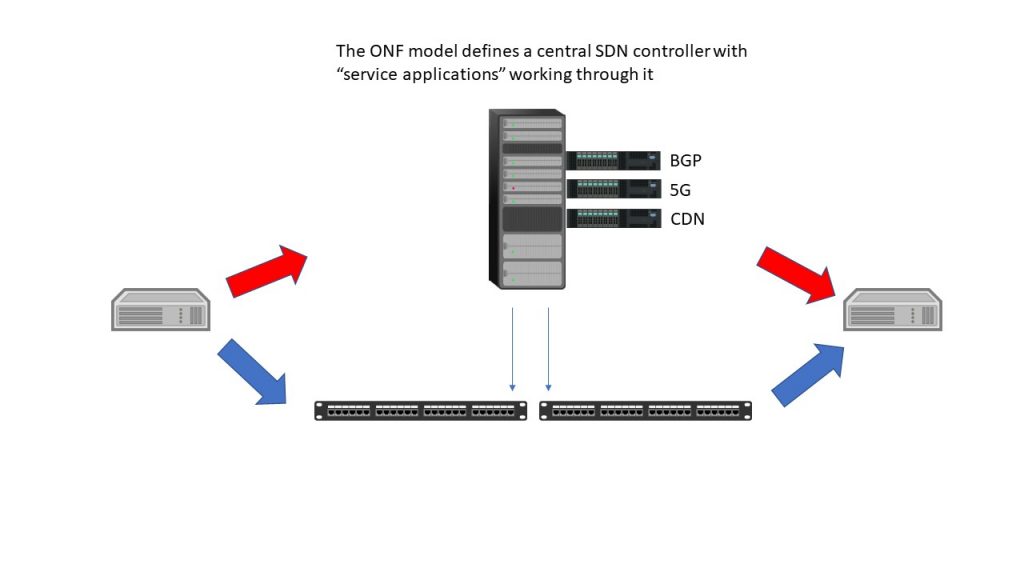

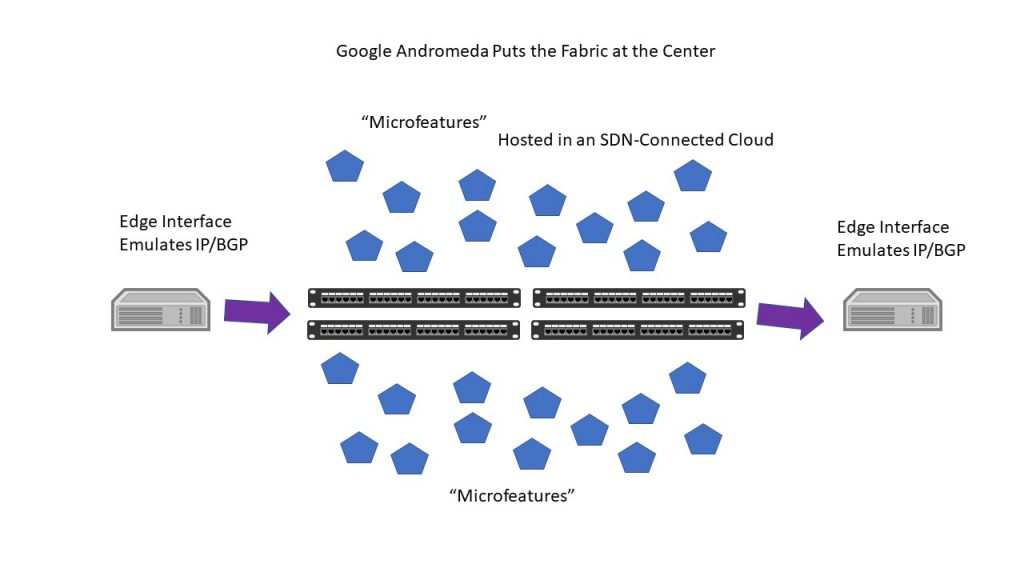

The notion of a separate control plane, and what I’ve called a “supercontrol-plane” might offer some relief from the technical issues that divide box and cloud people. With this approach, the data path stays comfortably seated in boxes (white boxes) and the control plane is lifted up and augmented to offer new service features and opportunities. But here again, can you create cloud/box collaboration on this, when the operators seem to want to sit back and wait for a solution to be presented? When the vendors who can afford to participate in activities designed to “transform” are being asked to work against their own bottom lines, because the transformation will lower operator spending on their equipment?

New players at the table seem a logical solution. Don Clarke, representing work from the Telecom Ecosystems Group, sent me a manifesto on creating a “code of conduct” aimed at injecting innovation into what we have to say is a stagnating mess. I suggested another element to it, the introduction of some form of support and encouragement for innovators to participate from the start in industry initiatives designed to support transformation. I’ve attended quite a few industry group meetings, and if you look out at the audience, you find a bunch of eager but unprepared operator types and a bunch of vendors bent on disrupting changes that would hurt their own interests. We need other faces, because if we don’t start transformation initiatives right, there’s zero chance of correcting them later.

This is one reason why Kevin Dillon and I have formed a LinkedIn group called “The Many Dimensions of Transformation”, which we’ll be using to act as a discussion forum. Kevin and I are also doing a series of podcasts on transformation, and we’ll invite network operators to participate in them where their willing to do so and where it’s congruent with their companies’ policies. A LinkedIn group doesn’t pose a high cost or effort barrier to participation. It can serve as an on-ramp to other initiatives, including open-source groups and standards groups. It can also offer a way of raising literacy on important issues and possible solutions. A community equipped to support insightful exchanges on a topic is the best way to spark innovation on that topic.

Will this help with vendor cooperation? It’s too early to say. We’ve made it clear that the LinkedIn group we’re starting will not accept posts that promote a specific product or service. It’s a forum for exchanges in concepts, not a facilitator of commerce. It’s also possible for moderators to stop disruptive behavior in a forum, where it’s more difficult to do that in a standards group or open-source community, where vendor sponsorship often gives the vendors an overwhelming majority of participants.

The vendor participation and support issue are important, not only because (let’s face it) vendors have a history of manipulating organizations and standards, but because vendors are probably critical in realizing any benefits from new network technology, or any technology, in the near term. Users don’t build, they use. Operators are, in a technology/product sense, users, and they’ve worked for decades within the constraint of building networks from available products, from vendor products.

I’d love to see the “Code of Conduct” initiative bear fruit, because it could expand the community of vendors. I’d also love to see some initiative, somewhere, focus on the ecosystem-building that’s going to be essential if we build the future network from the contribution of a lot of smaller players. You can’t expect to assemble a car if all you’re given is a pile of parts.