How much might carrier cloud impact edge computing? That question may be the most important one to answer for those interested in the edge, because while there’s been a lot of discussion about “drivers” for edge computing, none of the suggested drivers is a currently budgeted application. What’s needed for edge computing to surge is some direct, immediate, financial motivation to deploy it, and a willing player. Are there any? Operators are an obvious choice.

I think that one of the signs that there is at least hope of operator-driven life at the edge is the interest of the public cloud providers in promoting carrier cloud partnerships. Recall that my forecasts for carrier cloud have shown that if all opportunities were realized, carrier cloud could justify a hundred thousand incremental data centers, with over 90% at the edge. That would obviously dwarf the number of public cloud data centers we have today. No wonder there’s a stream of stories on how cloud providers are looking for partners among the network operators.

It’s also true that some companies are seeing edge computing arising from telecom, but not associated with carrier cloud at all. IBM and Samsung are linking their efforts to promote a tie between edge computing and private 5G. This initiative seems to demonstrate that there’s a broad view that 5G and edge computing are related. It also shows that there is at least the potential for significant edge activity, enough to be worth chasing.

My original modeling on carrier cloud suggested that the edge opportunity generated by hosting 5G control-plane features would account for only a bit less than 15% of edge opportunity by 2030. In contrast, personalization and contextualization services (including IoT) account for over 52% of that opportunity. That means that 5G may be more important to edge computing as an early justifier of deployment than as a long-term sustaining force.

As I noted last week in my blog about Google’s cloud, Google’s horizontal-and-vertical applications partnerships were aimed at moving cloud providers into carrier partnerships beyond hosting 5G features. Might Google be moving to seize that 52% driver for carrier cloud, and maybe 52% of the edge deployments my model suggests could be justified? Sure it could, and more than “could”, I’d say “likely”. The question is whether there’s another way.

The underlying challenge with edge computing is getting an edge deployed to compute with. By definition, an edge resource is near the point of activity, the user. Unless you want to visualize a bunch of edge users huddling around a few early edge locations, you need to think about getting a lot of edge resources out there just to create a credible framework on which applications could run. My model says that to do that in the US, you’d need about 20,000 edge points, which creates a challenge both in finding places to install all these servers and in getting the money to pay for them.

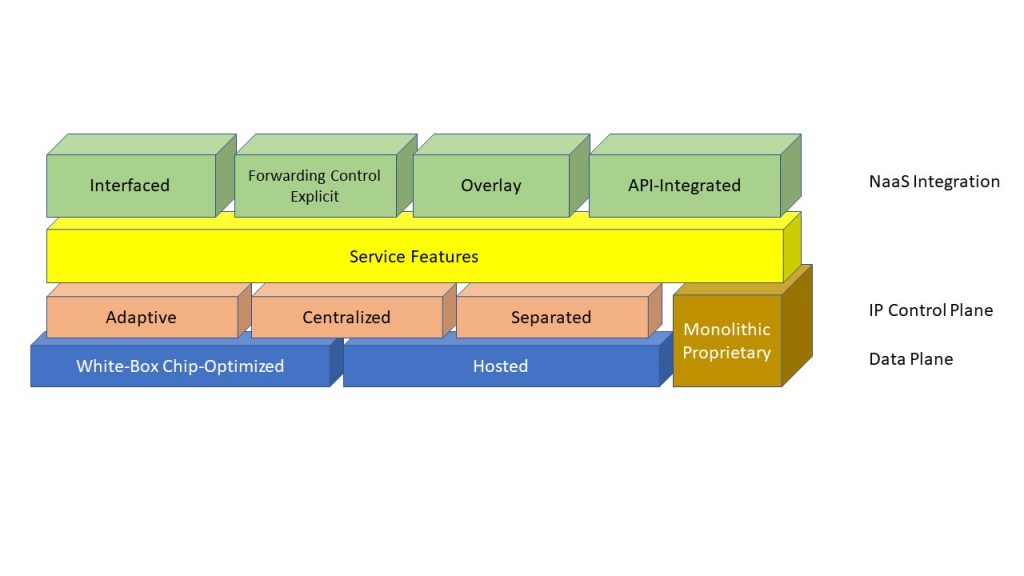

The nice thing about hosting 5G features is that those features could be consumed in proximity to all of those 20,000 locations. If a 5G technology model dependent on edge hosting were to deploy, funded by the 5G budgets, then we’d have a substantial edge footprint to exploit with other applications. However, a cursory examination of 5G and Open RAN shows that it’s more likely that the RAN components of 5G would be hosted in white boxes at the radio edge than deeper in. First, the white-box approach scales costs more in sync with service deployment. AT&T has already proved that. Second, while the operators who are offering 5G in the region where they also offer wireline services have facilities to host in, other operators don’t, and probably won’t want to buy facilities.

While 5G could directly stimulate edge deployment, then, it seems likely that unless there’s a perception that those edge resources will expand into multiple missions, operators could easily just use white-box devices to host 5G and eliminate the cost and risk of that preemptive 20,000-site deployment. That gets us back to what comes next, and raises the question of whether Google’s vertical-and-horizontal application-specific approach is the only, or best, answer.

The problem with relying on a set of specific applications to drive edge computing is that it risks “overbuild”. Consider this example. Suppose there were no database management systems available. Every application that needed a database would then have to write one from raw disk I/O upward. The effort would surely make most applications either prohibitively expensive for users or unprofitable for sellers. “Middleware” is essential in that it codifies widely needed facilities for reuse, reducing development cost and time. So, ask the following question: Where is the middleware for that 52% of drivers for edge computing? Answer: There isn’t any.

IoT as a vast collection of sensors waiting to be queried is as stupid a foundation for development as raw disk I/O is. We need to define some middleware model for IoT and other personalization/contextualization services. Given that middleware, we could then hope to jumpstart the deployment of services that would really justify those 20,000 edge data centers, not just provide something that might run on them if they magically deployed.

Personalization and contextualization services relating to edge computing opportunity focus on providing a link between an application user and the user’s real-world and virtual-world environments. A simple example is a service that refines a search for a product or service to reflect the location of the person doing the searching, or that presents ads based on that same paradigm. I’ve proposed in the past that we visualize these services as “information fields” asserted by things like shops or intersections, and that applications read and correlate these fields.

My “information fields” approach isn’t likely the only way to solve the middleware problem for edge computing, but it is a model that we could use to demonstrate a middleware option. Were such a concept in place, applications would be written to consume the fields and not the raw data needed to create the same level of information. Edge resources could then produce the fields once, for consumption by anything that ran there, and could communicate fields with each other rather than trying to create a wide sensor community.
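To make the idea a little more concrete, here is a minimal Python sketch of what consuming fields rather than raw sensor data might look like. It is only an illustration under my own assumptions, not a defined API: the names `InformationField`, `EdgeFieldRegistry`, `assert_field`, and `read` are hypothetical, and the flat x/y coordinates and circular field radius are simplifications chosen to keep the example short.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class InformationField:
    """Hypothetical 'field' asserted by a real-world thing (shop, intersection)."""
    source: str    # who asserts the field, e.g. "shop-a"
    topic: str     # what the field is about, e.g. "coffee" or "traffic"
    x: float       # position of the source, in arbitrary planar units
    y: float
    radius: float  # how far the field extends from the source
    payload: str   # the already-digested information the field carries


class EdgeFieldRegistry:
    """Held at one edge site: fields are produced once and read by many apps."""

    def __init__(self) -> None:
        self._fields: list[InformationField] = []

    def assert_field(self, field: InformationField) -> None:
        """Record a field asserted by some source near this edge site."""
        self._fields.append(field)

    def read(self, x: float, y: float, topic: str) -> list[InformationField]:
        """Return fields on `topic` that cover the point (x, y), nearest first."""
        hits = [
            f for f in self._fields
            if f.topic == topic and hypot(f.x - x, f.y - y) <= f.radius
        ]
        return sorted(hits, key=lambda f: hypot(f.x - x, f.y - y))


if __name__ == "__main__":
    registry = EdgeFieldRegistry()
    registry.assert_field(
        InformationField("shop-a", "coffee", 0.0, 0.0, 5.0, "open until 18:00"))
    registry.assert_field(
        InformationField("shop-b", "coffee", 3.0, 0.0, 5.0, "2-for-1 today"))
    registry.assert_field(
        InformationField("junction-7", "traffic", 1.0, 1.0, 2.0, "congested"))

    # An application asks what coffee-related fields cover the user's location.
    for field in registry.read(2.5, 0.0, "coffee"):
        print(field.source, "->", field.payload)
```

The point of the sketch is the division of labor: the application calls `read` with a location and a topic and never touches a sensor, while whatever populates the registry does the sensor-level work once for every application hosted at that edge site.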

There are multiple DBMS models today, each with its own specific value, and I suspect that there will be multiple models for personalization/contextualization middleware. What I’d like to see is a model that could create some 5G features as well as applications, because that model could stimulate edge deployment using 5G infrastructure as a justifier, and still generate something that could be repurposed to support the broader, larger, class of edge opportunity drivers.

It’s hard to say where this model might come from, though. Google, I think, is working to accelerate edge application growth and generate some real-world PR in the near term. That means they’d be unlikely to wait until they could frame out personalization/contextualization middleware, then try to convince developers to make their applications support it. A Google competitor? An open-source software player like Red Hat or VMware? A platform player like HPE? Who knows?

Without something like this, without a middleware or as-a-service framework within which edge applications can be fit, it’s going to be very difficult to build momentum for edge computing. The biggest risk is that, lacking any such tools, some candidate applications will migrate from “cloud edge” into the IoT ecosystem, as I’ve said will happen with connected car for other reasons. A simple IoT controller, combined with a proper architecture for converting events into transactions, could significantly reduce the value of an intermediate edge hosting point. Whether that’s good or bad in the near term depends on whether you have a stake in the edge. In the long term, I think we’ll lose a lot of value without edge hosting, so an architecture for this would be a big step forward.